The International 10 - Main Event Simulation

With the group stage of TI10 wrapped up, it's on to the main event! I simulated the possible outcomes of the bracket. PSG.LGD are looking like the favorites, but with 22 matches to go, the scene is ripe for some upsets.

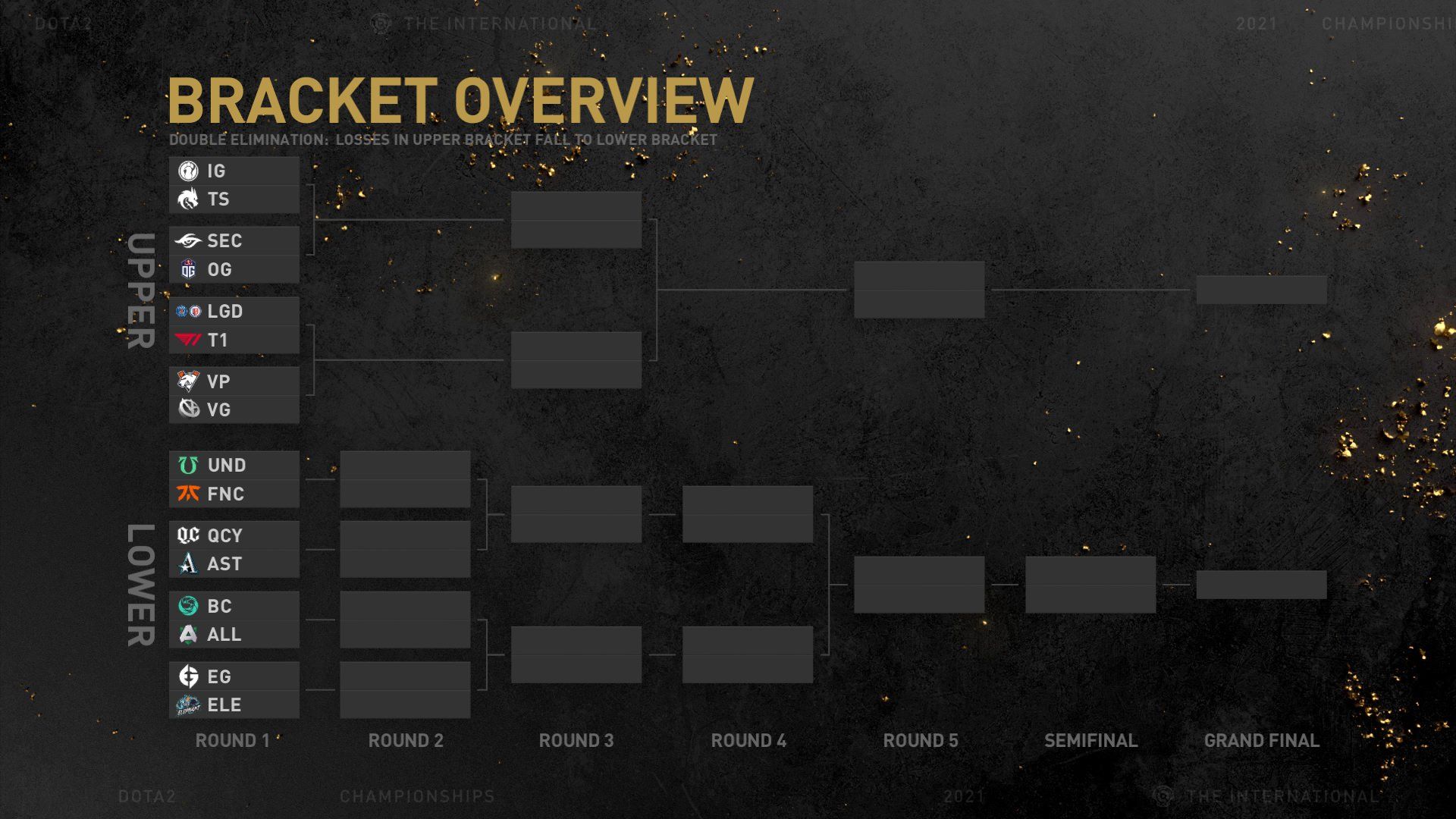

The bracket

First off, let's take a look at the bracket:

The first round of the lower bracket is a best-of-one, so all eight of those teams are in for an anxious start to the tournament. All other matches are best-of-three, except for the best-of-five grand final.
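The series length matters because a longer series gives the stronger team more room to recover from a single bad game, which is why a best-of-one opener is so nerve-wracking. As a rough sketch only (not the model's actual internals), assume each game is independent and won with the same per-game probability `p`; the chance of taking a best-of-n series then follows from counting how many games you can drop before clinching. The value 0.6 below is purely hypothetical.

```python
from math import comb


def series_win_prob(p: float, best_of: int) -> float:
    """Chance of winning a best-of-`best_of` series, assuming every game is
    independent and won with the same probability p."""
    wins_needed = best_of // 2 + 1
    # Clinch in exactly wins_needed + k games: lose k of the first
    # (wins_needed - 1 + k) games, then win the final game played.
    return sum(
        comb(wins_needed - 1 + k, k) * p**wins_needed * (1 - p) ** k
        for k in range(wins_needed)
    )


for n in (1, 3, 5):
    print(f"Bo{n}: {series_win_prob(0.6, n):.3f}")
```

With a hypothetical 60% per-game edge, this prints 0.600 for a best-of-one, 0.648 for a best-of-three and 0.683 for a best-of-five: the favorite's edge grows with every extra game.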

Simulating the bracket

I ran this bracket, along with my model's matchup predictions, through 50,000 Monte Carlo simulations to see how each team would fare. Here is each team's chance of taking home the Aegis, along with its median placement:

| Team Name | % 1st Place | Median Place |
|---|---|---|
| PSG.LGD | 39.46% | 2nd |
| Team Secret | 16.63% | 3rd |
| Vici Gaming | 13.38% | 4th |
| Virtus.pro | 8.74% | 5th-6th |
| Team Spirit | 8.49% | 5th-6th |
| Invictus Gaming | 5.69% | 5th-6th |
| T1 | 2.56% | 7th-8th |
| OG | 2.07% | 7th-8th |
| Undying | 1.40% | 9th-12th |
| Quincy Crew | 0.56% | 9th-12th |
| Team Aster | 0.45% | 13th-16th |
| Evil Geniuses | 0.27% | 9th-12th |
| Elephant | 0.22% | 13th-16th |
| Alliance | 0.03% | 9th-12th |
| Fnatic | 0.03% | 13th-16th |
| beastcoast | 0.01% | 13th-16th |
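The article doesn't publish its simulation code, so what follows is only a minimal sketch of the general Monte Carlo idea under loudly stated assumptions: a hypothetical four-team single-elimination bracket (far simpler than TI10's double-elimination bracket), made-up ratings, and an Elo-style logistic curve standing in for the model's matchup predictions. Each run plays out the whole bracket with random draws, and tallying 50,000 runs gives each team's share of titles and grand-final appearances.

```python
import random
from collections import Counter

N_SIMS = 50_000

# Hypothetical ratings for a toy four-team bracket; illustrative numbers only,
# not outputs of the article's model.
RATINGS = {"A": 1700, "B": 1600, "C": 1550, "D": 1500}


def win_prob(a: str, b: str) -> float:
    """Elo-style logistic curve: assumed chance that team a beats team b."""
    return 1 / (1 + 10 ** ((RATINGS[b] - RATINGS[a]) / 400))


def play(a: str, b: str, rng: random.Random) -> tuple[str, str]:
    """Simulate one series and return (winner, loser)."""
    return (a, b) if rng.random() < win_prob(a, b) else (b, a)


def simulate(n_sims: int, seed: int = 0) -> tuple[Counter, Counter]:
    """Count titles and grand-final appearances over n_sims bracket runs."""
    rng = random.Random(seed)
    titles: Counter = Counter()
    finalists: Counter = Counter()
    for _ in range(n_sims):
        # Semifinals: A vs D and B vs C; the winners meet in the final.
        semi1, _ = play("A", "D", rng)
        semi2, _ = play("B", "C", rng)
        champion, runner_up = play(semi1, semi2, rng)
        titles[champion] += 1
        finalists[champion] += 1
        finalists[runner_up] += 1
    return titles, finalists


titles, finalists = simulate(N_SIMS)
for team in RATINGS:
    print(f"{team}: {titles[team] / N_SIMS:.2%} 1st, "
          f"{finalists[team] / N_SIMS:.2%} top 2")
```

The fixed seed makes a run reproducible; raising the number of simulations shrinks the sampling noise in each percentage.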

In almost 40% of my simulations, PSG.LGD took first place, which is staggering. With the incredibly long journey from the lower bracket to the grand final, all of the lower bracket teams have worse chances than any upper bracket team. No team in the lower bracket is strong enough to consistently push through the rest.
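To see why the long lower-bracket road hurts so much, here is a simplified calculation that assumes every series on a team's path is won independently with the same hypothetical probability `q`. An upper-bracket team that never drops needs four series wins, while a team starting in lower bracket round 1 needs seven. Upper-bracket teams also get a second life after one loss, which this sketch ignores and which only widens the gap.

```python
def clean_run_prob(q: float, series_wins: int) -> float:
    """Chance of winning `series_wins` series in a row, assuming each one is
    won independently with the same probability q (a simplification)."""
    return q ** series_wins


UPPER_PATH = 4  # quarterfinal, semifinal, upper bracket final, grand final
LOWER_PATH = 7  # six lower-bracket rounds, then the grand final

for q in (0.5, 0.6, 0.7):
    upper = clean_run_prob(q, UPPER_PATH)
    lower = clean_run_prob(q, LOWER_PATH)
    print(f"q={q}: upper {upper:.2%}, lower {lower:.2%}, "
          f"ratio {upper / lower:.1f}x")
```

The ratio is always 1/q³, so even at q = 0.6 a clean lower-bracket run (about 2.80%) is roughly 4.6 times less likely than a clean upper-bracket run (about 12.96%).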

Naturally, starting in the upper bracket is a huge advantage. With the record-breaking $40 million prize pool, you don't have to win the Aegis to go home a millionaire, and upper bracket teams are much more likely to finish high in the standings. Of course, at the end of the day most people remember the winner, and winning is the main goal for each of these teams, but third place still gets you a medal at the Olympics. I doubt any team would be unhappy with a top-three finish: they might be disappointed that they didn't do better, but they wouldn't consider their run a failure either. Here's how likely each team is to place in the top three:

| Team Name | % Top 3 |
|---|---|
| PSG.LGD | 68.41% |
| Team Secret | 51.01% |
| Vici Gaming | 37.94% |
| Team Spirit | 36.86% |
| Invictus Gaming | 29.70% |
| Virtus.pro | 29.66% |
| OG | 15.70% |
| T1 | 13.62% |
| Undying | 6.12% |
| Quincy Crew | 3.27% |
| Team Aster | 2.65% |
| Evil Geniuses | 2.07% |
| Elephant | 1.79% |
| Alliance | 0.54% |
| Fnatic | 0.36% |
| beastcoast | 0.30% |

Again, we see the expected upper/lower bracket split. PSG.LGD and Team Secret are still the most likely candidates.

Vici Gaming and Team Spirit, the next two teams in line for a top-three finish, are nearly tied here (37.94% and 36.86%), and yet Vici have a much higher chance than Team Spirit of finishing first (13.38% versus 8.49%).

While Virtus.pro were the fourth most likely to take first, they drop down to sixth most likely to finish in the top three.

There are a couple of other such shuffles (T1 are more likely than OG to win it all, for example, but less likely to reach the top three), and they all come down to the bracket itself. Depending on when a team is scheduled to run into its toughest matchups, it might find itself bottlenecked at a particular stage of the bracket. Of course, we'll just have to wait and see how it plays out!

With 22 matches (seven in the upper bracket, plus one lower-bracket match or grand final for each of the 15 teams that get knocked out), there are 2^22 = 4,194,304 possible ways this bracket could play out (more if you consider the different possible scorelines of each match, but those don't affect anybody's placement). Some are obviously much more likely than others (sorry, beastcoast, you aren't too likely to stage seven upsets in a row). The takeaway is that nobody can predict exactly what will happen, so just sit back and enjoy the show!

Model caveats

There are a few caveats to the model, which are probably most obvious in its assessments of OG and Undying. While regional strength and where a team has played its recent games are important factors in the model, calibration has found that, on average, recent performance is one of the strongest indicators of success.
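The article doesn't say exactly how recency enters the model. As one hypothetical illustration (exponential decay with an assumed half-life, not the author's actual method), here is how weighting recent games more heavily can pull a team's rating well below what its overall record suggests.

```python
def recency_weighted_win_rate(results: list[int], half_life: float) -> float:
    """Win rate in which a game `half_life` games further back counts half as much.

    results: 1 for a win and 0 for a loss, ordered oldest to newest.
    Exponential decay and the half_life value are illustrative assumptions.
    """
    n = len(results)
    # The newest game gets weight 1; each older game decays geometrically.
    weights = [0.5 ** ((n - 1 - i) / half_life) for i in range(n)]
    return sum(w * r for w, r in zip(weights, results)) / sum(weights)


# Hypothetical record: four early wins followed by four recent losses.
record = [1, 1, 1, 1, 0, 0, 0, 0]
print(f"plain win rate:   {sum(record) / len(record):.3f}")
print(f"recency-weighted: {recency_weighted_win_rate(record, 2.0):.3f}")
```

With a half-life of two games this prints 0.500 for the plain rate and 0.200 for the weighted one: the same 4-4 record looks far worse once the recent slump dominates.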

The model is designed to have the highest possible predictive power across all pro games, and the DPC circuit and other regional tournaments make up the bulk of those games. This isn't inherently a problem, but there will always be specific situations that are fundamentally different, and there could never be enough data to properly account for them.

Case in point, OG: their recent results have been fairly mediocre, and certainly not what you would expect of a two-time TI winner. And yet, everybody knows that when TI comes around, OG know how to turn it up to 11. There's nowhere near enough good data for a model to ever pick up on that, which is why good old-fashioned qualitative analysis still has a place. I could have manually adjusted some predictions, but there's no objective way to do so. One of the strengths of a model-based approach is that it can be automated to make predictions for thousands of matches, and no person has that kind of bandwidth. You win some, you lose some.

All of that is to say that yes, some of the outputs are a bit strange. However, it's generally much safer to trust the model than to try to guess which situations it's incorrect about.

As always, keep up with our suggested bets to see how our predictions stack up against the market, and where we would suggest staking your money.

For more in-depth match analysis, discussion, and predictions, as well as quantitative betting strategy tips, subscribe to stay in the loop! You can also follow us on Twitter or Facebook for quick prediction snapshots of upcoming matches. Alacrity is your fastest path from simply gambling to beating the market. Stay ahead of the game!